AI Meets VR in New Nvidia Tech

By John P. Mello Jr.

Dec 4, 2018 5:00 AM PT

Nvidia on Monday announced a breakthrough in 3D rendering research that may have far-reaching ramifications for future virtual worlds.

A team led by Nvidia Vice President Bryan Catanzaro discovered a way to use a neural network to render synthetic 3D environments in real time, using a model trained on real-world videos.

Currently, each object in a virtual world has to be modeled individually. With Nvidia's technology, worlds can be populated with objects "learned" from video input.

Nvidia's technology offers the potential to quickly create virtual worlds for gaming, automotive, architecture, robotics or virtual reality. The network can, for example, generate interactive scenes based on real-world locations or show consumers dancing like their favorite pop stars.

"Nvidia has been inventing new ways to generate interactive graphics for 25 years, and this is the first time we can do so with a neural network," Catanzaro said.

"Neural networks -- specifically generative models -- will change how graphics are created," he added. "This will enable developers to create new scenes at a fraction of the traditional cost."

Learning From Video

The research currently is on display at the NeurIPS conference in Montreal, Canada, a show for artificial intelligence researchers.

Nvidia's team created a simple driving game for the conference that allows attendees to interactively navigate an AI-generated environment.

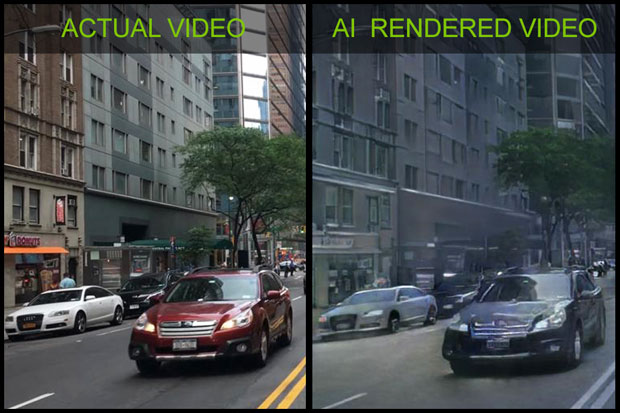

The neural network that renders the virtual urban environment was trained on videos of real-life urban environments. The network learned to model the appearance of the world, including lighting, materials and their dynamics.

Since the output is synthetically generated, a scene easily can be edited to remove, modify or add objects.

Reducing Labor Overhead

Rendering 3D graphics is a labor-intensive process right now. Nvidia's technology could change that in the future.

"This is cool because it's using deep learning to cut down on what has traditionally been a very manual and resource-intensive activity," said Tuong Nguyen, an analyst with Gartner, a research and advisory company based in Stamford, Connecticut.

"This has applications wherever 3D graphics are used -- video games, augmented reality, virtual reality, TV and movies," he told TechNewsWorld.

"It frees up the graphic professionals' time so they can do other things, such as improve on a scene's quality with additional details," Nguyen added. "The idea is to lay the foundation, or at least do a lot of the heavy lifting, so you can spend more time and energy on making a project stand out in many other different ways."

"Developers and users of virtual environments will especially benefit from the new technology," noted Tamar Shinar, an assistant professor in the department of computer science and engineering at the University of California, Riverside.

"It potentially replaces the laborious process of designing the appearance of a virtual world, and expensive methods to render it photorealistically, with a process based on video input and computation at interactive rates," she told TechNewsWorld.

"It enables the rendering of virtual environments from video data," Shinar continued. "This novel approach to interactive rendering of virtual environments opens many possibilities for interactive applications such as games, telecommunication and training simulators."

Competition for Hollywood

By taking the drudgery out of 3D rendering, Nvidia's technology also could bring into the market players that previously had been priced out of it.

"Currently, the creation of 3D content and scenes has been very labor-intensive and limited to companies with big budgets -- primarily games companies," said Bill Orner, a senior member of IEEE, a technical professional organization with corporate headquarters in New York City.

"This deep learning model will enable other industries that don't have 'Hollywood' budgets to create 3D interactive tools," he told TechNewsWorld.

"One thing that artificial intelligence and machine learning do is take the human out of some of the process," explained Michael Goodman, director for digital media in the Newton, Massachusetts, office of Strategy Analytics, a research, advisory and analytics firm.

"That allows a lot of money to be saved," he told TechNewsWorld.

That could be good news for content producers for virtual reality headsets.

"Currently, VR content creation is prohibitively costly, and it is difficult to create the kinds of experiences consumers are looking for," explained Kristen Hanich, a research analyst with Dallas, Texas-based Parks Associates, a market research and consulting company specializing in consumer technology products.

"Lowering the barrier to entry should help with the VR industry's content problem -- there's a lack of it," she told TechNewsWorld.

Nevertheless, Nvidia has some work to do before the promise of its deep learning technology can be fulfilled.

"While interesting, the technology is in its early stages," observed Parks Associates analyst Craig Leslie Sr.

"The graphics aren't photorealistic, showing the fuzziness encountered with many AI-generated images," he told TechNewsWorld. "It will require significant improvement before it will be considered market ready."

Simulating Bad Behavior

The Nvidia technology also may find a home in the automotive industry.

"A computer's ability to quickly read and understand real-life environments is a critical piece of the self-driving future," said Eric Yaverbaum, CEO of Ericho Communications, a public relations firm in New York City.

"These deep-learning tools could make it easier for cars to make sense of the world around them and navigate their surroundings with less chance for error," he told TechNewsWorld.

"As much as this technology can be used to create rich 3D worlds for gaming technologies," he added, "its application in automobiles seems more profound. It could give AI-driven cars a more accurate computer model that would dramatically improve passenger safety."

A problem currently faced by self-driving car developers is simulating real-life driving environments.

"Traffic models now are too simplistic," said Richard Wallace, transportation systems analysis director for the Center for Automotive Research, a nonprofit automotive research organization in Ann Arbor, Michigan.

"Simulation drivers are too well-behaved. We need more realism," he told TechNewsWorld.

"The industry is beginning to realize that these AI systems can never drive enough real-world miles to get all the learning they need to drive a vehicle, so simulation is starting to become prevalent everywhere," Wallace added. "Nvidia's technology could be very useful for that."

from TechNewsWorld https://ift.tt/2APd6bL https://ift.tt/2BPWRwR

John P. Mello Jr. has been an ECT News Network reporter since 2003. His areas of focus include cybersecurity, IT issues, privacy, e-commerce, social media, artificial intelligence, big data and consumer electronics. He has written and edited for numerous publications, including the Boston Business Journal, the Boston Phoenix, Megapixel.Net and Government Security News.